Chapter 8. Testing for Differences in Mean and Proportion: Independent Samples t-test

Independent Samples t-test: Test Statistic and p-value


Independent Samples t-test: Test Statistic

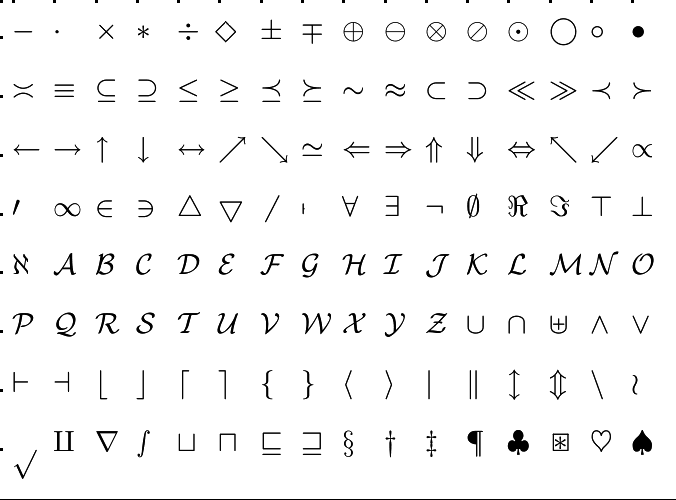

The test statistic of an independent samples #t#-test is denoted #t# and is computed with the following formula:

\[t=\cfrac{(\bar{X}_1-\bar{X}_2) - (\mu_1 - \mu_2)}{s_{(\bar{X}_1 - \bar{X}_2)}} = \cfrac{\bar{X}_1-\bar{X}_2 }{\sqrt{\cfrac{s^2_1}{n_1}+\cfrac{s^2_2}{n_2}}}\]

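where #s_{(\bar{X}_1 - \bar{X}_2)}# is the estimated standard error of the mean difference. The second expression assumes that #H_0# specifies #\mu_1 - \mu_2 = 0#, as in all the tests in this section.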
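As a rough sketch, the formula can be evaluated in Python directly from summary statistics. The function name independent_t and its parameters are hypothetical; delta0 plays the role of the hypothesized difference #\mu_1 - \mu_2#, which is #0# in the simplified form. The example call uses the summary statistics of the reading-and-memory example in this section.

```python
import math

def independent_t(mean1, s1, n1, mean2, s2, n2, delta0=0.0):
    """Return (standard_error, t) for an independent samples t-test.

    Hypothetical helper: s1 and s2 are sample standard deviations,
    delta0 is the value of mu1 - mu2 stated by H0 (0 by default).
    """
    # Estimated standard error of the mean difference
    se = math.sqrt(s1 ** 2 / n1 + s2 ** 2 / n2)
    # Observed difference minus hypothesized difference, in standard-error units
    t = ((mean1 - mean2) - delta0) / se
    return se, t

se, t = independent_t(32.0, 2.6, 36, 32.8, 3.7, 40)
print(f"se = {se:.5f}, t = {t:.4f}")  # se = 0.72803, t = -1.0989
```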

Under the null hypothesis of an independent samples #t#-test, the #t#-statistic approximately follows a #t_{df}#-distribution, but the appropriate degrees of freedom #df# are given by a complicated formula.

We will use a simpler, more conservative value: #df# is the smaller of #n_1-1# and #n_2-1#.

\[df=\min(n_1-1, n_2-1)\]
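A minimal sketch of this rule in Python, with hypothetical function names. For comparison only, it also evaluates the Welch-Satterthwaite approximation, which is the usual form of the complicated formula mentioned above; it is not used anywhere else in this section.

```python
def conservative_df(n1, n2):
    # Simpler, conservative rule used in this section: the smaller of n1 - 1 and n2 - 1
    return min(n1 - 1, n2 - 1)

def welch_df(s1, n1, s2, n2):
    # Welch-Satterthwaite approximation (shown for comparison only)
    v1 = s1 ** 2 / n1
    v2 = s2 ** 2 / n2
    return (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))

print(conservative_df(36, 40))                 # 35
print(round(welch_df(2.6, 36, 3.7, 40), 2))
```

Because the Welch-Satterthwaite value always lies between #\min(n_1-1, n_2-1)# and #n_1+n_2-2#, the simpler rule never uses more degrees of freedom than software would. Fewer degrees of freedom mean heavier tails and therefore a larger #p#-value, which is what makes the rule conservative.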

Calculating the p-value of an Independent Samples t-test with Statistical Software

The calculation of the #p#-value of an independent samples #t#-test depends on the direction of the test and can be performed using either Excel or R.

To calculate the #p#-value of an independent samples #t#-test for #\mu_1-\mu_2# in Excel, make use of one of the following commands:

\[\begin{array}{llll}

\phantom{0}\text{Direction}&\phantom{000000}H_0&\phantom{000000}H_a&\phantom{0000000}\text{Excel Command}\\

\hline

\text{Two-tailed}&H_0:\mu_1 - \mu_2 = 0&H_a:\mu_1 - \mu_2 \neq 0&=2 \text{ * }(1 \text{ - } \text{T.DIST}(\text{ABS}(t),df,1))\\

\text{Left-tailed}&H_0:\mu_1 - \mu_2 \geq 0&H_a:\mu_1 - \mu_2 \lt 0&=\text{T.DIST}(t,df,1)\\

\text{Right-tailed}&H_0:\mu_1 - \mu_2 \leq 0&H_a:\mu_1 - \mu_2 \gt 0&=1\text{ - }\text{T.DIST}(t,df,1)\\

\end{array}\]

Where #df=\text{MIN}(n_1\text{ - }1, n_2\text{ - }1)#.
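The three Excel commands can be mirrored in Python as a cross-check. Python's standard library has no #t#-distribution, so this sketch assumes a hand-written cumulative distribution function that integrates the #t# density numerically with Simpson's rule; the names t_cdf and p_value and the direction labels are hypothetical. In practice, a library routine such as scipy.stats.t.cdf would normally be used instead.

```python
import math

def t_pdf(x, df):
    # Density of the t-distribution with df degrees of freedom
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)

def t_cdf(x, df, steps=2000):
    # Assumed stand-in for T.DIST(x, df, 1): P(T <= x) = 0.5 + integral of the density from 0 to x.
    # Simpson's rule with an even number of steps; adequate for moderate |x|.
    h = x / steps
    total = t_pdf(0.0, df) + t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 + total * h / 3

def p_value(t, df, direction):
    # One branch per row of the Excel table
    if direction == "two-tailed":
        return 2 * (1 - t_cdf(abs(t), df))   # =2*(1-T.DIST(ABS(t),df,1))
    if direction == "left-tailed":
        return t_cdf(t, df)                  # =T.DIST(t,df,1)
    if direction == "right-tailed":
        return 1 - t_cdf(t, df)              # =1-T.DIST(t,df,1)
    raise ValueError(f"unknown direction: {direction}")

for d in ("two-tailed", "left-tailed", "right-tailed"):
    print(d, round(p_value(-1.09886, 35, d), 3))
```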

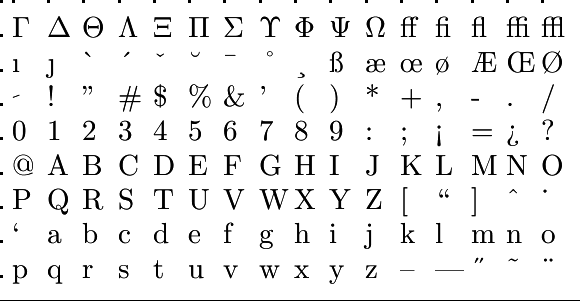

To calculate the #p#-value of an independent samples #t#-test for #\mu_1 - \mu_2# in R, make use of one of the following commands:

\[\begin{array}{llll}

\phantom{0}\text{Direction}&\phantom{000000}H_0&\phantom{000000}H_a&\phantom{00000000000}\text{R Command}\\

\hline

\text{Two-tailed}&H_0:\mu_1 - \mu_2 = 0&H_a:\mu_1 - \mu_2 \neq 0&2 \text{ * }\text{pt}(\text{abs}(t),df,lower.tail=\text{FALSE})\\

\text{Left-tailed}&H_0:\mu_1 - \mu_2 \geq 0&H_a:\mu_1 - \mu_2 \lt 0&\text{pt}(t,df, lower.tail=\text{TRUE})\\

\text{Right-tailed}&H_0:\mu_1 - \mu_2 \leq 0&H_a:\mu_1 - \mu_2 \gt 0&\text{pt}(t,df, lower.tail=\text{FALSE})\\

\end{array}\]

Where #df=\text{min}(n_1\text{ - }1, n_2\text{ - }1)#.

If #p \leq \alpha#, reject #H_0# and conclude #H_a#. Otherwise, do not reject #H_0#.
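The decision rule is a single comparison. As a small illustrative sketch (the function name decide is hypothetical):

```python
def decide(p, alpha):
    # Reject H0 when p <= alpha; otherwise do not reject H0
    return "reject H0" if p <= alpha else "do not reject H0"

print(decide(0.279, 0.04))  # do not reject H0
```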

A psychologist recruits a total of #76# subjects. Approximately half of them are given the easy-to-read text #(X_1)#, and the other half are given the hard-to-read text #(X_2)#. Both groups are given #20# minutes to study the text, after which they are tested on how well they remember what they have read.

The psychologist plans on using an independent samples #t#-test to determine whether there is a significant difference in the memory performance between the two groups, at the #\alpha = 0.04# level of significance.

The psychologist obtains the following results:

| Easy-to-read #(X_1)# | Hard-to-read #(X_2)# |
|---|---|
| #\bar{X}_1 = 32.0# | #\bar{X}_2 = 32.8# |
| #s_1 = 2.6# | #s_2 = 3.7# |
| #n_1 = 36# | #n_2 = 40# |

Calculate the #p#-value of the test and make a decision regarding #H_0: \mu_1 - \mu_2 = 0#. Round your answer to #3# decimal places.

#p=0.279#

On the basis of this #p#-value, #H_0# should not be rejected, because #p \gt \alpha# (#0.279 \gt 0.04#).

There are several ways to calculate the #p#-value of the test. Two solutions are given below: one using Excel and one using R.

Compute the estimated standard error of the mean difference:

\[s_{(\bar{X}_1-\bar{X}_2)} = \sqrt{\cfrac{s^2_1}{n_1}+\cfrac{s^2_2}{n_2}} = \sqrt{\cfrac{2.6^2}{36}+\cfrac{3.7^2}{40}} = 0.72803\]

Compute the #t#-statistic:

\[t=\cfrac{\bar{X}_1-\bar{X}_2}{s_{(\bar{X}_1-\bar{X}_2)}}=\cfrac{32.0 - 32.8}{0.72803}=-1.0989\]

Determine the degrees of freedom:

\[df = \min(n_1-1, n_2-1) = \min(35, 39)=35\]

Since both #n_1# and #n_2# are large (#\gt 30#), the Central Limit Theorem applies, and under the assumption that #H_0# is true, the test statistic

\[t=\cfrac{\bar{X}_1-\bar{X}_2}{ \sqrt{\cfrac{s^2_1}{n_1}+\cfrac{s^2_2}{n_2}}}\]

approximately follows the #t_{df} = t_{35}# distribution.
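The claim that the statistic approximately follows a #t#-distribution under #H_0# can be checked informally by simulation. This sketch is an illustration added here, not part of the method: it assumes normally distributed populations with equal means and standard deviations equal to the sample values #2.6# and #3.7#, and all function names are hypothetical. The simulated share of #|t|# values at least as extreme as the observed #|t| = 1.09886# should land near the #t#-based #p#-value of about #0.279#, up to Monte Carlo noise and the fact that #df = 35# is a conservative choice.

```python
import math
import random

def mean_var(xs):
    # Sample mean and sample variance (divisor n - 1)
    n = len(xs)
    m = sum(xs) / n
    return m, sum((x - m) ** 2 for x in xs) / (n - 1)

def t_statistic(x1, x2):
    # Difference in sample means divided by the estimated standard error
    m1, v1 = mean_var(x1)
    m2, v2 = mean_var(x2)
    return (m1 - m2) / math.sqrt(v1 / len(x1) + v2 / len(x2))

def simulated_p(t_obs, n1, sd1, n2, sd2, reps=10_000, seed=1):
    # Assumption: both populations are normal with equal means, so H0 is true.
    # Returns the share of simulated |t| values at least as large as |t_obs|.
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        x1 = [rng.gauss(0.0, sd1) for _ in range(n1)]
        x2 = [rng.gauss(0.0, sd2) for _ in range(n2)]
        if abs(t_statistic(x1, x2)) >= abs(t_obs):
            hits += 1
    return hits / reps

print(simulated_p(-1.09886, 36, 2.6, 40, 3.7))
```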

To calculate the #p#-value of a #t#-test, make use of the following Excel function:

T.DIST(x, deg_freedom, cumulative)

- x: The value at which you wish to evaluate the distribution function.

- deg_freedom: An integer indicating the number of degrees of freedom.

- cumulative: A logical value that determines the form of the function. The commands in this section pass 1, which Excel treats as TRUE.

  - TRUE: uses the cumulative distribution function, #\mathbb{P}(X \leq x)#

  - FALSE: uses the probability density function
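A Python stand-in for T.DIST can make the two forms of the cumulative argument concrete. This is a sketch under assumptions: the name t_dist is hypothetical, and the cumulative form is computed by numerically integrating the density with Simpson's rule rather than by Excel's own algorithm. The last lines repeat the two-tailed command used in this example.

```python
import math

def t_dist(x, deg_freedom, cumulative, steps=2000):
    # Hypothetical stand-in for Excel's T.DIST(x, deg_freedom, cumulative)
    log_c = (math.lgamma((deg_freedom + 1) / 2) - math.lgamma(deg_freedom / 2)
             - 0.5 * math.log(deg_freedom * math.pi))

    def pdf(u):
        # Density of the t-distribution with deg_freedom degrees of freedom
        return math.exp(log_c) * (1 + u * u / deg_freedom) ** (-(deg_freedom + 1) / 2)

    if not cumulative:
        return pdf(x)  # FALSE: probability density function
    # TRUE: P(X <= x) = 0.5 + integral of the density from 0 to x (Simpson's rule, even steps)
    h = x / steps
    total = pdf(0.0) + pdf(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return 0.5 + total * h / 3

# Mirrors =2*(1-T.DIST(ABS(t),df,1)) with t = -1.09886 and df = 35
t, df = -1.09886, 35
p = 2 * (1 - t_dist(abs(t), df, True))
print(round(p, 3))  # 0.279
```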

Since we are dealing with a two-tailed #t#-test, run the following command to calculate the #p#-value:

\[

=2 \text{ * }(1 \text{ - } \text{T.DIST}(\text{ABS}(t),df,1))\\

\downarrow\\

=2 \text{ * }(1 \text{ - } \text{T.DIST}(\text{ABS}(\text{-}1.09886), 35,1))

\]

This gives:

\[p = 0.279\]

Since #p \gt \alpha#, #H_0: \mu_1 - \mu_2 = 0# should not be rejected.


Alternatively, to calculate the #p#-value of the #t#-test in R, make use of the following R function:

pt(q, df, lower.tail)

- q: The value at which you wish to evaluate the distribution function.

- df: The number of degrees of freedom (must be positive; it does not need to be an integer).

- lower.tail: If TRUE (the default), probabilities are #\mathbb{P}(X \leq q)#; otherwise, #\mathbb{P}(X \gt q)#.
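A hypothetical Python stand-in for pt shows how lower.tail switches between #\mathbb{P}(X \leq q)# and #\mathbb{P}(X \gt q)#. It is a sketch that integrates the #t# density numerically with Simpson's rule, not R's own algorithm, and the name pt_sketch is ours. The last lines repeat the two-tailed R command used in this example.

```python
import math

def pt_sketch(q, df, lower_tail=True, steps=2000):
    # Hypothetical stand-in for R's pt(q, df, lower.tail); df may be non-integer
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(u):
        # Density of the t-distribution with df degrees of freedom
        return math.exp(log_c) * (1 + u * u / df) ** (-(df + 1) / 2)

    # P(X <= q) = 0.5 + integral of the density from 0 to q (Simpson's rule, even steps)
    h = q / steps
    total = pdf(0.0) + pdf(q)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    lower = 0.5 + total * h / 3
    return lower if lower_tail else 1 - lower  # lower_tail=False gives P(X > q)

# Mirrors 2 * pt(q = abs(t), df = 35, lower.tail = FALSE) with t = -1.09886
t = -1.09886
p = 2 * pt_sketch(abs(t), 35, lower_tail=False)
print(round(p, 3))  # 0.279
```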

Since we are dealing with a two-tailed #t#-test, run the following command to calculate the #p#-value:

\[

2 \text{ * } \text{pt}(q = \text{abs}(t), df = \text{min}(n_1 \text{ - } 1,n_2 \text{ - } 1), lower.tail = \text{FALSE})\\

\downarrow\\

2\text{ * } \text{pt}(q = \text{abs}(\text{-}1.09886), df = 35, lower.tail = \text{FALSE})

\]

This gives:

\[p = 0.279\]

Since #p \gt \alpha#, #H_0: \mu_1 - \mu_2 = 0# should not be rejected.
